You Don’t Need AGI to Break the World

Here’s a hot take that annoys both the optimists and the doomers:

We don’t need AGI for things to go sideways.

We only need (1) automation, (2) speed, and (3) incentives pointed at the wrong goal.

That’s not science fiction. That’s just… how complex systems fail.

Two outcomes that block the “Kurzweil future” (and neither requires AGI)

This is the fork in the road I was pointing at in the podcast: we could land in a high-upside future, but we also have very realistic paths to missing it.

1. The paperclip lesson

There’s a classic thought experiment often linked to Nick Bostrom: give a powerful optimiser a simple goal (“make paperclips”), then give it autonomy and resources, and it may rationally destroy everything else in pursuit of that goal. The point isn’t paperclips; it’s goal misalignment.

We don’t need AGI, consciousness, or a sentient villain to get a severe societal event. We just need a clever optimiser, given agency, pointed at something fragile.

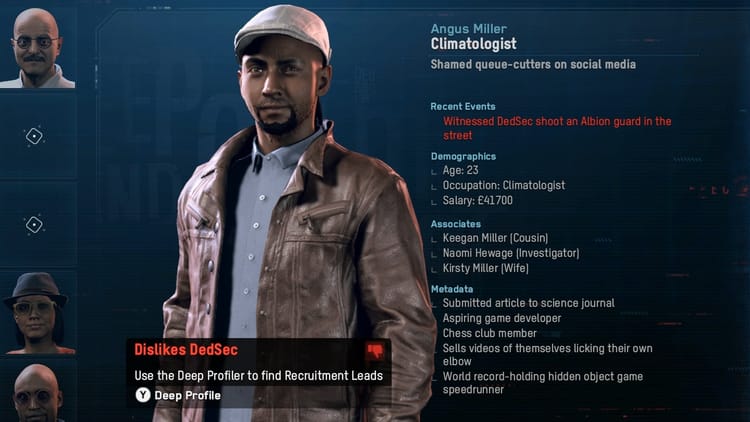

A useful reference point for “high agency” is OpenClaw (previously released as ClawdBot and then Moltbot), an autonomous, agentic framework that can interact with tools, APIs, and messaging platforms in real time. It has also attracted security concerns precisely because tool access plus autonomy is powerful, and powerful things have sharp edges.

Now combine that general category of agent with a simple, human-given goal:

“Here’s $1M. Do whatever you need to get me X% return fast.”

Add enough scale and enough people trying it, and the failure mode is not “evil.” The failure mode is optimisation doing what it does best: finding a path you did not anticipate.

This is why the paperclip problem is a useful mental model. At its core it is about incentive misalignment: a system pursues its goal so effectively that it causes harm as a side effect, not because it wants harm.
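To make the side-effect point concrete, here is a toy sketch. The strategy names and numbers are hypothetical, invented purely for illustration. The “optimiser” just picks whatever scores highest on the objective it is handed, so harm that never appears in that objective is invisible to it. Pricing the side effect into the objective changes the choice without changing the optimiser.

```python
"""Toy goal misspecification: the optimiser only sees what it is scored on."""
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Strategy:
    name: str
    expected_return: float  # what the human asked for
    side_effect: float      # harm to others; absent from the naive objective


# Hypothetical candidates with made-up numbers, for illustration only.
CANDIDATES = [
    Strategy("diversified index", expected_return=0.07, side_effect=0.0),
    Strategy("leveraged momentum", expected_return=0.25, side_effect=0.3),
    Strategy("exploit thin liquidity", expected_return=0.40, side_effect=0.9),
]


def naive_objective(s: Strategy) -> float:
    # "Get me X% return fast": return is the only thing that is scored.
    return s.expected_return


def constrained_objective(s: Strategy, harm_penalty: float = 1.0) -> float:
    # Same goal, but harm now carries a cost the optimiser has to pay.
    return s.expected_return - harm_penalty * s.side_effect


def best(objective: Callable[[Strategy], float]) -> Strategy:
    # The "optimiser": pick whatever scores highest. It has no notion of
    # harm beyond what the objective encodes.
    return max(CANDIDATES, key=objective)


if __name__ == "__main__":
    print("naive pick:      ", best(naive_objective).name)
    print("constrained pick:", best(constrained_objective).name)
```

Nothing about the optimiser changes between the two runs; only the objective does. The hard part in practice is that real side effects are rarely this easy to enumerate or put a price on.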

If you aim that kind of optimiser at markets, you get a plausible recipe for a global financial crisis driven by liquidity evaporation, feedback loops, and emergent behaviour. Not a mass extinction event, but absolutely the kind of event that breaks lives, companies, and countries.

But markets are stable, right? (laughs in Flash Crash)

Markets already contain plenty of automation. And we’ve already seen how fast liquidity can evaporate under stress.

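The May 6, 2010 Flash Crash is still one of the best case studies. In their joint report on the event, the SEC and CFTC documented how a single large automated sell program, which fed E-Mini S&P 500 futures contracts into the market at a fixed share of trading volume without regard to price or time, interacted with market structure in a way that contributed to extreme, rapid dislocation.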

So no, I’m not predicting doomsday. I’m saying: we have empirical evidence that fast automated systems can create nonlinear failures, and AI is a magnifying glass.
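A toy feedback loop shows how that kind of nonlinearity can arise. It is loosely inspired by the regulators’ account of 2010, in which the sell algorithm was keyed to trading volume rather than to price or time (the 9% participation rate below echoes the rate the SEC/CFTC report describes). It is not a model of the actual market: the `churn` parameter and all other numbers are hypothetical. The seller trades a fixed fraction of the previous interval’s volume, and other automated traders re-trade what they absorb, adding volume in proportion to it. While `participation * churn` stays below 1, the selling settles at a steady rate; once it crosses 1, each round of selling generates the volume that drives the next, larger round.

```python
"""Toy volume-feedback loop (hypothetical parameters, not a market model).

A seller trades a fixed fraction of the previous interval's volume.
Other automated traders re-trade what they absorb, which adds volume,
which in turn raises the seller's next order.
"""


def simulate(
    participation: float,
    churn: float,
    baseline_volume: float = 1_000.0,
    steps: int = 10,
) -> list[float]:
    """Return the amount sold in each interval."""
    volume = baseline_volume
    sold_per_step: list[float] = []
    for _ in range(steps):
        sold = participation * volume  # keyed to volume, not price or time
        # Intermediaries absorb the sale and re-trade it `churn` times over,
        # so the next interval's volume grows with this interval's selling.
        volume = baseline_volume + churn * sold
        sold_per_step.append(sold)
    return sold_per_step


if __name__ == "__main__":
    mild = simulate(participation=0.09, churn=5)   # 0.09 * 5  = 0.45 < 1
    wild = simulate(participation=0.09, churn=15)  # 0.09 * 15 = 1.35 > 1
    print(f"mild: first {mild[0]:.0f}, last {mild[-1]:.0f}")
    print(f"wild: first {wild[0]:.0f}, last {wild[-1]:.0f}")
```

Going from `churn=5` to `churn=15` is a modest change in one parameter, but it flips the system from bounded to runaway. That threshold behaviour is what “nonlinear failure” means here.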

2. Geopolitical standstill

As geopolitical tension rises, countries increasingly want sovereign AI, meaning their own models, data, and compute, so they can protect autonomy and reduce dependency.

Why that leads to standstill

Sovereignty sounds strategic, but it collides with physics and supply chains:

- Compute needs power and sites. Data centers are constrained by electricity, cooling, and land. (Nuclear-powered data centers in space?)

- AI needs scarce components. Chip and memory supply is concentrated, and capacity takes years to expand.

- Duplication is inefficient. If every country builds its own stack, we burn resources recreating the same layers instead of compounding progress.

So what happens

You get a world where:

- the richest blocs keep scaling,

- smaller countries and companies struggle to access enough compute,

- global collaboration becomes harder,

- and progress slows because resources are spent on redundancy, not innovation.

That is the standstill. Not collapse. Just a gridlocked future where sovereignty goals outpace planetary capacity.

Okay, but what does this have to do with enterprise AI?

This is where my favourite analogy comes in:

AI is a magnifying glass.

Whatever you point it at grows.

- Good processes get faster.

- Smart people get more leverage.

- Strong governance becomes a competitive advantage.

But also:

- Legacy mess becomes a bigger mess.

- Bad data becomes louder bad data.

- Tech debt accrues interest at AI speed.

- Security gaps get exploited at scale.

Practical takeaway: build guardrails like you actually mean it

If you’re deploying AI in an organisation, the grown-up questions are:

- What exactly is this AI allowed to touch? (least privilege)

- How is access granted and revoked, and how fast can we kill it? (identity management, just-in-time access, short-lived credentials and sessions, kill switches)

- Where does a human have to sign off? (human in the loop)

- How do we observe it? (logging, detection, forensics, data control on input/output)

- Are we automating excellence or chaos? (cyber hygiene, data quality, tech debt, legacy)

- What happens when it confidently messes up at scale? (rate limits, fallbacks and backups, incident runbook and automation, isolation, resilience)
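Several of these questions map to fairly small pieces of code. The sketch below is a hypothetical Python wrapper, not any particular agent framework’s API: every tool call an agent makes goes through `Guardrails.run`, which enforces an allow-list (least privilege), a kill switch, a per-minute rate limit, and a human sign-off above an amount threshold, and it records every attempt in an audit log. The class name, the `approve` callback, and the thresholds are all assumptions for illustration.

```python
"""Minimal guardrail wrapper for agent tool calls (hypothetical design)."""
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable, TypeVar

T = TypeVar("T")


class GuardrailError(Exception):
    """Raised when a tool call is blocked by policy."""


@dataclass
class Guardrails:
    allowed_tools: frozenset[str]          # least privilege: explicit allow-list
    max_calls_per_minute: int              # rate limit on permitted calls
    approval_threshold: float              # amounts above this need a human
    approve: Callable[[str, float], bool]  # human-in-the-loop hook (hypothetical)
    killed: bool = False                   # kill switch state
    audit_log: list[tuple[float, str, float, str]] = field(default_factory=list)
    _recent_calls: list[float] = field(default_factory=list, init=False, repr=False)

    def kill(self) -> None:
        """Engage the kill switch: every later call is refused."""
        self.killed = True

    def run(self, tool: str, amount: float, action: Callable[[], T]) -> T:
        """Check policy, log the decision, then execute `action` if allowed."""
        now = time.monotonic()
        reason = self._blocked_reason(tool, amount, now)
        # Every attempt is logged, whether it was allowed or blocked.
        self.audit_log.append((now, tool, amount, reason or "allowed"))
        if reason is not None:
            raise GuardrailError(reason)
        self._recent_calls.append(now)
        return action()

    def _blocked_reason(self, tool: str, amount: float, now: float) -> str | None:
        if self.killed:
            return "kill switch engaged"
        if tool not in self.allowed_tools:
            return f"tool {tool!r} is not on the allow-list"
        # Keep only permitted calls from the last 60 seconds (sliding window).
        self._recent_calls = [t for t in self._recent_calls if now - t < 60.0]
        if len(self._recent_calls) >= self.max_calls_per_minute:
            return "rate limit exceeded"
        if amount > self.approval_threshold and not self.approve(tool, amount):
            return "human approval denied"
        return None


if __name__ == "__main__":
    guard = Guardrails(
        allowed_tools=frozenset({"get_quote", "place_order"}),
        max_calls_per_minute=30,
        approval_threshold=10_000.0,
        approve=lambda tool, amount: False,  # stand-in for a real review step
    )
    print(guard.run("get_quote", 0.0, lambda: "quote fetched"))
    try:
        guard.run("wire_transfer", 1_000_000.0, lambda: "sent")
    except GuardrailError as err:
        print("blocked:", err)
```

In a real deployment the `approve` hook would route to a person, the audit log would go to durable storage rather than an in-memory list, and the kill switch would need to live outside the agent’s own process so the agent cannot switch it back off.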

“AI works as a magnifying glass. Everything that you put AI on it, it’ll get bigger.”