AI didn’t invent cyber war, it just gave it a jet engine 🚀

I keep seeing two bad takes about AI in cyber: “it’s overhyped” and “it’s going to end civilisation.”

Both miss the real story.

AI is a force multiplier inside a world that is already brittle. It makes deception cheaper, scanning faster, and response windows shorter. That is enough to change how security needs to operate.

After talking through this on Enterprise Tech Talk, I wanted a companion article that is all substance: sources, examples, and the practical implications we did not have time to unpack.

Two books, two extremes, one useful middle

When I’m trying to stay grounded in the AI conversation, I like triangulating between two very different lenses:

- Nexus: A Brief History of Information Networks from the Stone Age to AI by Yuval Noah Harari sits closer to the cautious/pessimistic end. One of the book’s core warnings (my read of it) is that power concentrates around whoever controls information flows, and AI can become an “alien” information network that scales influence without needing to be conscious or “alive.”

- The Singularity Is Nearer: When We Merge with AI by Ray Kurzweil sits closer to the optimistic end: humans + machines, expanded cognition, big upside, eventually…

I don’t land fully in either camp. I’m using them as bookends so I can aim for the boring, useful middle: what is happening now, what is likely soon, and what can we do about it without spiralling into sci-fi doomposting.

Cybersecurity isn’t “IT stuff” anymore. It’s infrastructure warfare.

If everything important runs on digital systems, then destabilising digital systems becomes a shortcut to destabilising societies. You don’t need bombs to create chaos, you need outages.

A concrete example: right as Russia invaded Ukraine, a cyberattack hit Viasat’s KA-SAT network, knocking tens of thousands of modems offline and causing spillover effects across parts of Europe. The EU explicitly described the timing and disruptive impact in its statement.

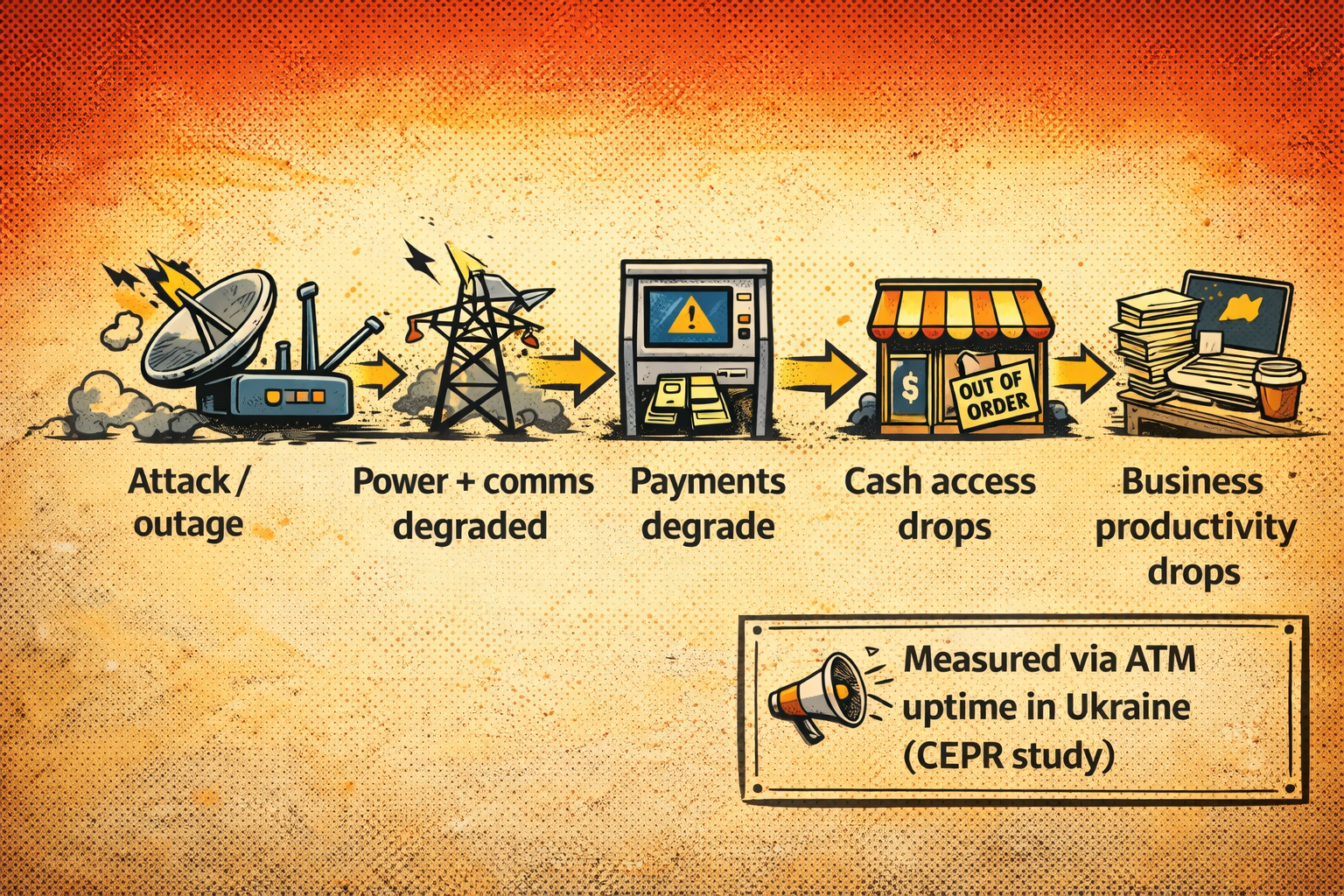

Another example that stuck with me is a study that used ATM telemetry to measure the economic impact of attacks on Ukraine’s power grid. Researchers tracked around 500 ATMs across regions, because an ATM going offline is a very literal signal that “power and connectivity are down”. The study describes how attacks culminated in late November 2022, including the 23 November strike that led to a nationwide blackout and about 55% of productive time being lost the next day. It also notes the knock-on effect: remove power and cash access collapses with it. Payments and logistics do not gracefully degrade, they cliff-dive.

Around the same period, destructive malware (“wipers”) like WhisperGate and HermeticWiper were used against Ukrainian organisations to destroy systems—not steal data, not ransom, just break things.

That’s the context for the next step: AI doesn’t need to be a super-intelligence to be strategically dangerous. It just needs to scale existing tactics.

“AI in cyber” is mostly an accelerant (and that’s already enough)

AI is already being used at scale for:

- Disinformation and influence: faster, cheaper creation of believable synthetic content, including deepfakes.

- Social engineering at volume: better phishing lures, faster targeting, multilingual persuasion. (Not magic, just speed + iteration.)

- Adaptive malware experiments: This is the part people underestimate. We’re starting to see early examples of malware that uses LLMs during execution to regenerate/obfuscate itself (experimental, but directionally important).

The threat actor pyramid (and why you still get hit even if you’re “not a target”)

A simple model I use is a four-tier pyramid:

Nation states → organised crime → hacktivists → opportunists

At the top, capability is expensive and strategic. But tools and tactics don’t stay exclusive forever. Once techniques get observed, copied, packaged, and sold, they trickle down.

That’s how you end up with a weird reality where:

- A nation-state operation burns a vulnerability

- then it becomes a public incident

- then vendors patch

- then criminals weaponise what’s left in the gap

- and suddenly every security team on Earth is patching like it’s a fire drill.

This is why geopolitical tension matters to regular companies: you’re collateral.

“The basics” are still the game (and the numbers prove it)

In Australia, ASD’s ACSC received 84,700 cybercrime reports in FY2024–25, roughly one report every six minutes.

That doesn’t mean “one breach every six minutes”, but it does support the bigger point: the volume is relentless, and most organisations are still fighting the fundamentals.
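For anyone who wants to check the "every six minutes" figure, here is a quick back-of-the-envelope sketch. It assumes reports are spread evenly across a 365-day financial year, which is a simplification of mine and not something the ACSC figure claims.

```python
# Sanity check on the "one report every six minutes" figure.
# Assumption: reports arrive evenly across a 365-day financial year.
REPORTS_PER_YEAR = 84_700          # figure quoted above for FY2024-25
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600 minutes


def minutes_between_reports(reports: int, minutes: int = MINUTES_PER_YEAR) -> float:
    """Return the average gap between reports, in minutes."""
    return minutes / reports


gap = minutes_between_reports(REPORTS_PER_YEAR)
print(f"Average gap: {gap:.1f} minutes")  # Average gap: 6.2 minutes
```

So "roughly every six minutes" holds up as an average, which is all it is: real reporting will be lumpier than that.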

One more datapoint that feels like the future arriving early

An autonomous security testing system called XBOW reportedly reached #1 on a public bug bounty leaderboard, essentially outperforming humans in at least one narrow slice of vulnerability discovery. And we don’t need AGI for that.

Practical takeaway: treat AI like a force multiplier

If you’re leading security (or honestly any digital function), here’s the grounded version:

- Assume volume goes up. Attacks get cheaper to generate and faster to iterate. Strong hygiene is more important than ever before.

- Assume your comms matter more. Disinformation isn’t just politics; it’s brand, fraud, and trust.

- Double down on resilience. Outages (cyber or not) are part of the modern threat model.

- Patch latency becomes a strategic risk. The “gap” between disclosure and remediation is where the chaos lives.

“AI just makes everything more accessible… it just makes the barrier for entry for cyber criminals as low as anything else.”