A 'Watch Dogs' World Is Closer Than You Think: My Reflections on AI, Surveillance, and Your Privacy

Alright, last time we chatted about how AI is actually helping stretched security teams by tackling tedious tasks. But let's be honest, there's another side to the AI coin, right? That slightly unsettling feeling... like our tech knows a bit too much about us.

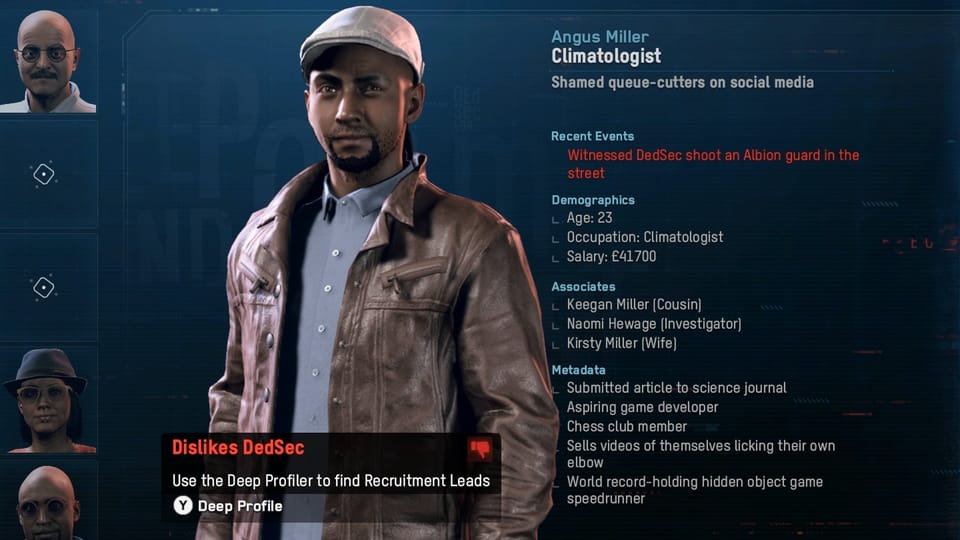

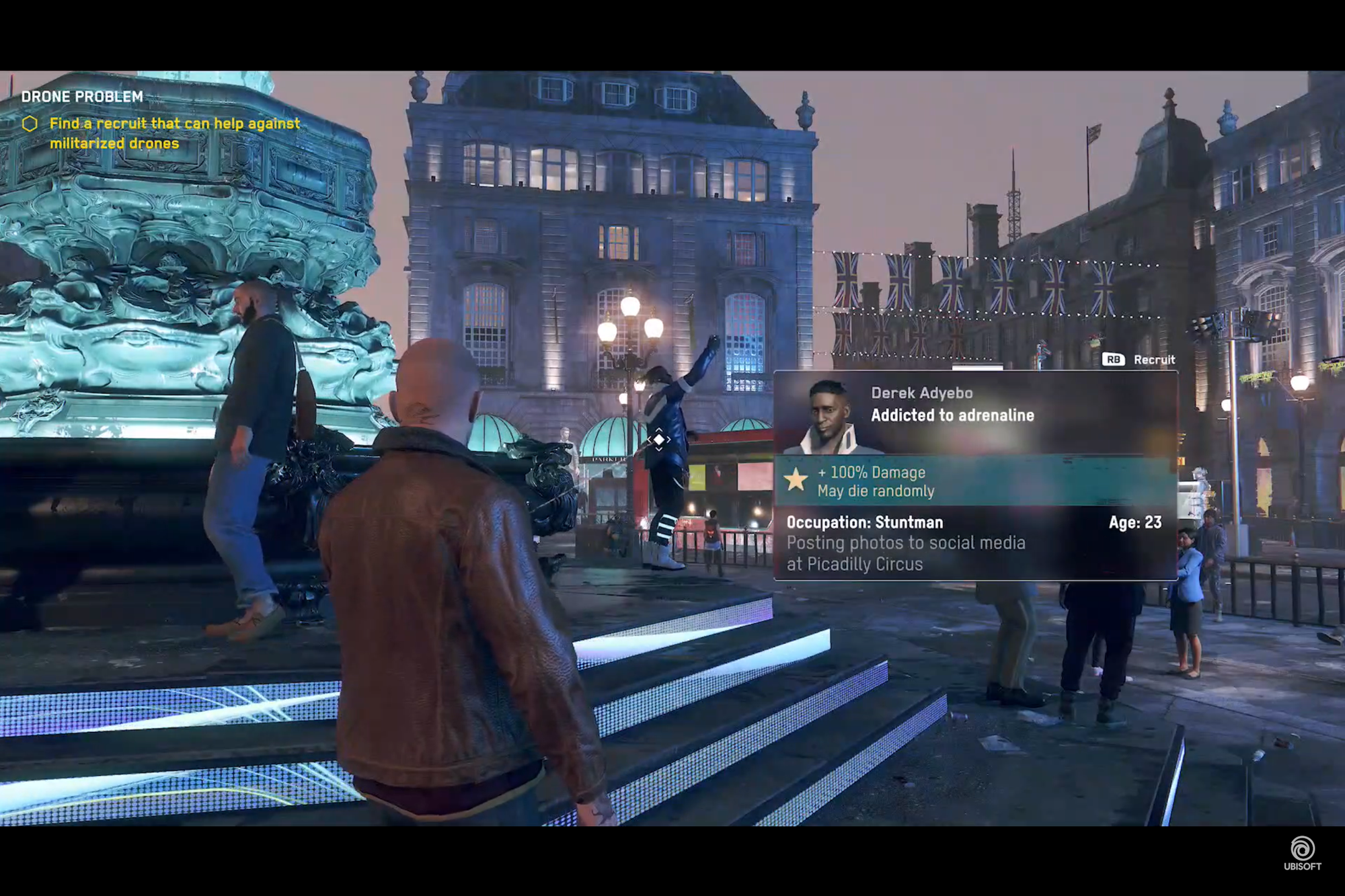

Ever get that uncanny feeling when an ad pops up for something you just talked about? You're not alone. This growing unease around AI and privacy is real. Interestingly, the video game series Watch Dogs explored this hyper-connected, always-watching world years ago. The game is fiction, featuring a city run by a single, all-seeing system called 'ctOS', but it tapped into anxieties that feel incredibly relevant today, and increasingly like a Black Mirror episode about to become reality.

So what are the real privacy concerns with AI right now, and how close are we to that sci-fi vision?

AI Isn't Just Watching, It's Analysing

Think beyond basic CCTV. Artificial intelligence is supercharging surveillance. Modern AI, especially sophisticated models that understand both images and language, can analyse video feeds in real time, identify objects, track movements, and even try to interpret behaviour.

A key part of this is Facial Recognition Technology (FRT). It's being rolled out by governments and companies for everything from unlocking phones to identifying people in crowds. Now, the tech has its uses, but it's also sparked major concerns:

- Accuracy Issues: Studies (like those by NIST in the US) have shown some FRT systems are less accurate for people with darker skin tones, women, and older individuals. This isn't just a glitch; it's led to real-world cases of wrongful arrests overseas, disproportionately affecting minority groups.

- Erosion of Anonymity: Even perfectly accurate FRT raises questions. Do we want a future where our movements in public spaces can be easily tracked and logged? It changes the feeling of simply being able to go about your day unnoticed.

When Data Reflects Our Flaws

Here’s a crucial point: AI learns from the data we feed it. And if that data – scraped from the internet, historical records, etc. – reflects existing societal biases (like racism or sexism), the AI often learns and can even amplify those biases.

It’s usually not deliberate on anyone's part, but the impact is real:

- FRT Misidentification: As mentioned, biases in training data contribute to accuracy differences across demographics.

- Hiring Tools: Remember Amazon's experimental recruiting AI that penalised resumes containing the word "women's" (as in "women's chess club")? It learned from a decade of past hiring data, most of it from male applicants.

- Predictive Policing: Tools used overseas to predict crime hotspots or 'risky' individuals have faced heavy criticism for potentially reinforcing existing inequalities in policing.

It’s a reminder that a decision being "data-driven" by AI doesn't automatically make it fair. We need to be critical about the data sources and the assumptions built into these systems.

Our Data: The Fuel Powering the AI Engine

All this AI wizardry runs on data – mountains of it. Often, our personal data. Think about the information collected by:

- Smartphone apps (location, contacts)

- Website cookies and trackers

- Social media activity

- Even publicly available information scraped online

Much of this happens without our full, informed consent (who really reads those T&Cs?). AI then analyses this data to build incredibly detailed profiles, inferring things like our health status, financial situation, political views, and habits – things we might never explicitly share. And those inferences can now be scarily accurate.

The risks?

- Data Breaches: These detailed profiles are goldmines for criminals.

- Manipulation: Insights can be used for highly targeted advertising or even influencing opinions.

- Discrimination: Potential for unfair treatment in areas like insurance, lending, or job opportunities based on inferred data.

So, Was Watch Dogs Just a Game?

This brings us back to Watch Dogs. The game's 'ctOS' – a single system controlling the city and knowing everything about everyone – isn't quite our reality. Our tech world is more fragmented.

But the themes? They hit closer to home.

- The potential for pervasive surveillance? Check.

- Data being used to profile, judge, and potentially control people? Increasingly, check.

- Concerns about power being concentrated in the hands of those who control the tech? Absolutely.

Watch Dogs might be fiction, but it works as a powerful allegory: a warning about the potential downsides of unchecked technological advancement and data collection, and a reminder of how close we may already be to that reality. It highlights the constant tension between convenience, security, and our fundamental right to privacy.

Where Do We Go From Here?

Navigating this is tricky. AI offers incredible benefits, as we discussed previously, but we can't ignore the privacy implications. We need robust discussions, strong regulations (like GDPR, but perhaps more tailored and proactive globally), and a commitment to ethical development. Transparency, fairness, and human oversight are critical.

It's not about stopping progress, but about steering it responsibly.